Research

My research interests include Fairness, Explainability and Privacy in AI, Large-Scale Optimization, Bayesian Optimization, and Causal Inference on Networks.

Responsible AI

Reducing unfairness and bias across large-scale AI models and AI driven products.

Biases can arise from several avenues in AI driven products. Whether it is intrinsic bias in the training data that has been collected or bias introduced during model training. In many situations these bias gets percolated through cyclic looping. We are focusing on building methodologies and systems that tackle such bias in end-to-end AI systems to make it fair. Towards that we have developed a few frameworks focusing on large-scale AI applications:

- Pushing the limits of fairness impossibility: Who’s the fairest of them all? : We develop a framework to reconcile the contradictory impossibility results of fairness through the lens of optimizaiton. (NeurIPS 2022)

- Achieving Fairness via Post-Processing in Web-Scale Recommender Systems : We focus on large-scale ranking problems and develop fairness mitigation strategies for them. (FAccT 2022)

- Long-term Dynamics of Fairness Interventions: We focus on a connection recommendation problems and study long-term impact of fairness interventions (AIES 2022)

- Evaluating Fairness using Permutation Tests: We develop a detection mechanism via a flexible framework that allows practioners to identify significant bias exhibited by machine learning models via rigorous statistical hypothesis tests. (KDD 2020)

- Detection and Mitigation of Algorithmic Bias via Predictive Rate Parity : We study how to measure and mitigate algorithmic bias along the fairness defition of Predictive Rate Parity. (Preprint)

- A Framework for Fairness in Two-Sided Marketplaces: We propose a definition and develop an end-to-end framework for achieving fairness while building marketplace products at scale. (Preprint)

We are also working in the space of Explainable AI and some of the recent work can be found in this blog where we describe how we are making our AI systems more transparent and explanable. Finally, just giving explanations may not be enough and it is critical to develop techniques that leverage these explanations towards model improvments. Towards that, we introduce:

- Heterogenous Calibration: A method that leverages heterogeniety from the data to improve the overall model performance. (Preprint)

Large-Scale Optimization

Solving extremely large linear programs arising from several web-focused applications.

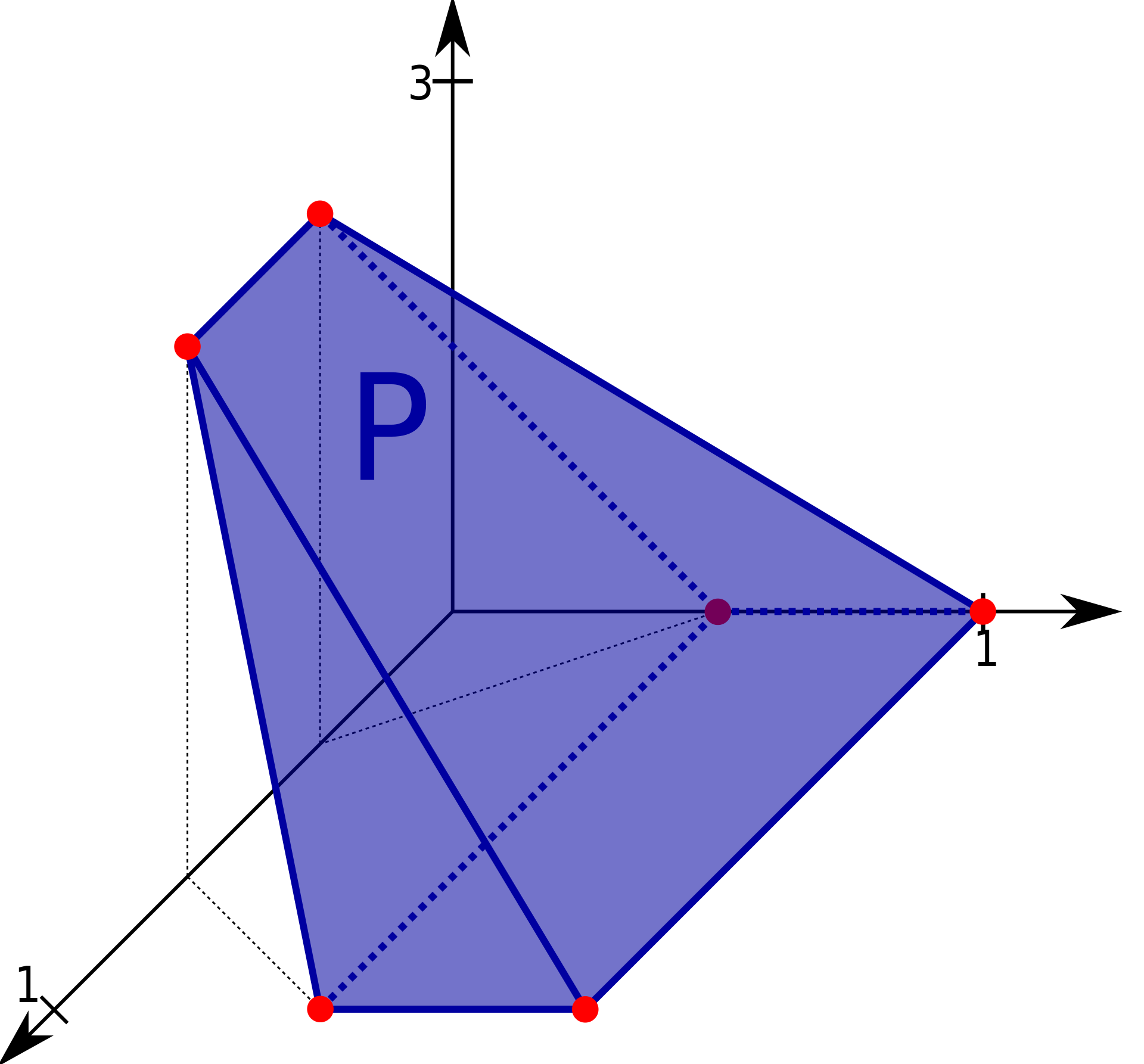

Key problems arising in web applications (with millions of users and thousands of items) can be formulated as Linear Programs (LP) involving billions to trillions of decision variables and constraints. Despite the appeal of LP formulations, solving problems at these scales is well beyond the capabilities of existing LP solvers. Through the years, I've worked in this space to develop large-scale optimization solvers:

- A Light-speed Linear Program Solver for Personalized Recommendation with Diversity Constraints: We develop highly efficient near online algorithm which allows us to inject diversity constraints to large-scale recommender systems (NeurIPS 2022 Workshop).

- Efficient Vertex-Oriented Polytopic Projection for Web-scale Applications: We develop highly efficient algorithms to scale up and generalize the prior state-of-the-art solver (AAAI 2022).

- ECLIPSE: An extreme-scale LP Solver for structured problems such as matching and multi-objective optimization ( ICML 2020 )

- QCQP via Low-Dicrepancy Sequences: Solving quadratically constrained quadratic programs arising from modeling dependencies in various applications (NeurIPS 2017 )

- Videos:

Bayesian Optimization

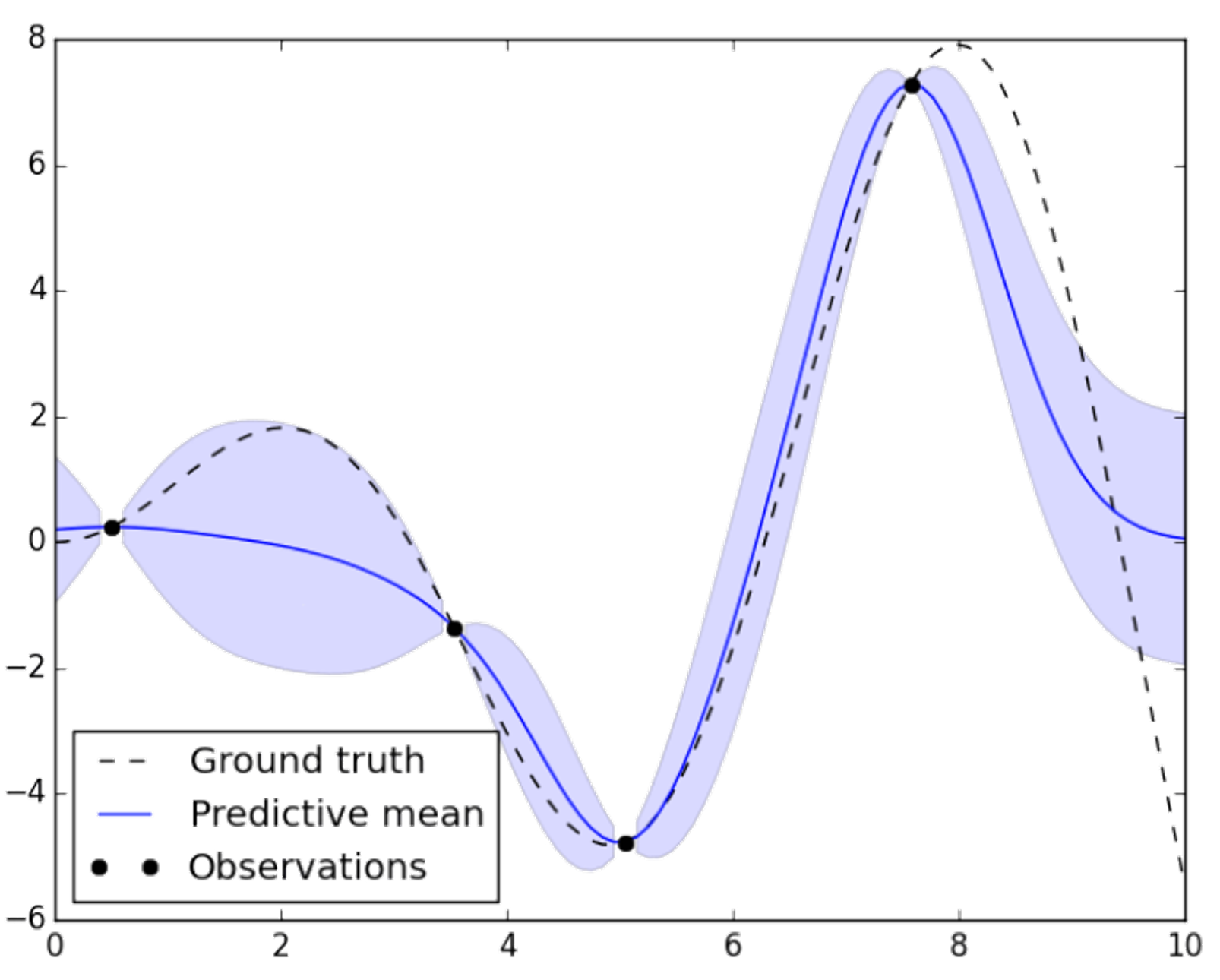

Tuning parameters for optimizing offline and online machine learning systems.

In any machine learning system, there exists parameters which when appropriately tuned can drastically change the efficiency and predictive accuracy of the model. This is an growing area of research that focuses on doing this automatically without any human in the loop. We develop on the exisiting literature to design systems that can easily scale to online problems as well as large ML pipelines offlines.

- Online Parameter Selection for Web-based Ranking: We develop a mechanism to automatically choose optimal parameters to balance multiple objectives in a large-scale ranking system. (KDD 2018)

- Adaptive Rate of Convergence of Thompson Sampling for Gaussian Process Optimization: We derive a theoretical rate of convergence for the Thompson Sampling algorithm (Preprint)

- Videos:

Causal Inference on Networks

Accurate hypothesis testing in the presense of interference and heterogeneity.

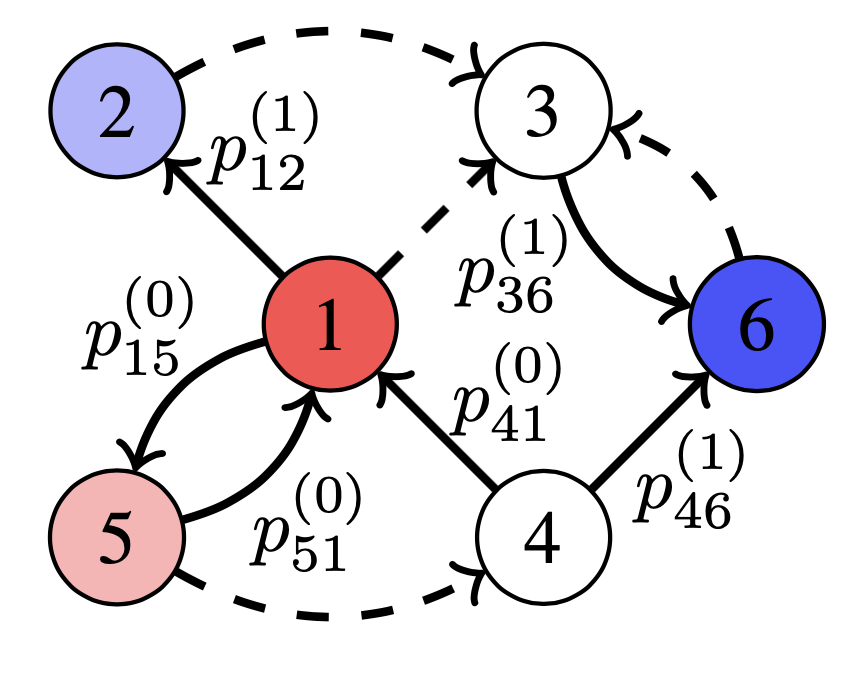

Since we primarily work on network and graphical data, it is of utmost importance to consider problems with a strong network effect or intereference. This is especially crucial in developing methodologies for statistical tests. The usual assumptions of A/B testing breaks in such conditions and we focus on developing techniques that can give us accurate estimates of causal effect even in the presence of interference in dense large networks.

- Generalized Causal Tree for Uplift Modeling: We derive a generalized version of the causal tree algorithm that can simulatenous tackle multiple treatments and generate heterogeneous causal effect across different treatments with statistical consistency. The applications include any upflift modeling use cases that considers multiple treatments. (Preprint)

- Heterogenous Causal Effects: We derive a framework to personalize and optimize of decision parameters in web-based systems via heterogenous causal effects. We are able to show that by capturing the heterogenous member behavior we can drastical improve the overall metrics for a large-scale AI system. (The Web Conference WWW 2021)

- OASIS: We introduce OASIS, a mechanism for A/B testing in dense large-scale networks. We design an approximate randomized controlled experiment by solving an optimization problem and then apply an importance sampling adjustment to correct in bias in order to estimate the causal effect. (NeurIPS 2020 Spotlight)